The Impact of Hyperlocal Market Understanding on E-commerce


“One morning, I decided to order the newly launched flavor of a cold brew via a Q-com app.

Interestingly, the product was out of stock at my home address in Gurgaon. Half an hour later, after reaching my office just 4 km away, I tried again and was surprised to find the same product available at my office address.

As the e-commerce manager for this cold brew brand, I felt compelled to check the ROAS of the marketing ad campaigns I had launched a week earlier for this new product. The figures were below our initial expectations. This raised a critical question: could this gap in product availability be hurting my ROAS, causing us to miss valuable sales opportunities and waste marketing spend? Had we misaligned demand offtake and product availability?”

This is not an isolated or one-off issue. Brands selling multiple products across various platforms in a hyper-competitive online market face similar challenges. To maintain their position, they prioritize and monitor key customer-facing metrics such as Share of Shelf, Share of Search, On-Shelf Availability (OSA), and trade spends.

However, data accuracy is a concern, and accuracy requires granularity. Current data collection processes rely on sampled pin codes, which limits representativeness and leads to inaccuracies in key metrics.

- Brands manually scrape data on a case-by-case basis and check metrics only at a single point in time. For example, if there’s an issue with a particular SKU’s search ranking on Blinkit in Gurgaon, someone from the team manually checks the ranking for a few specific locations at that moment.

- Alternatively, brands work with agencies or use tools that scrape data for a fixed set of pin codes. Yet the data often ends up isolated in large CSV files, neglected in recipients' inboxes, and seldom used unless a significant crisis occurs. This makes it difficult to correlate the data with other performance metrics to determine cause and effect.

Do the current solutions truly enable better decision-making?

Limited granularity impacts decision-making

Current scraping processes operate at a sampled pin code level. They fail to capture the nuances of hyperlocal demand and supply dynamics, which are critical for building effective strategies and making sound decisions.

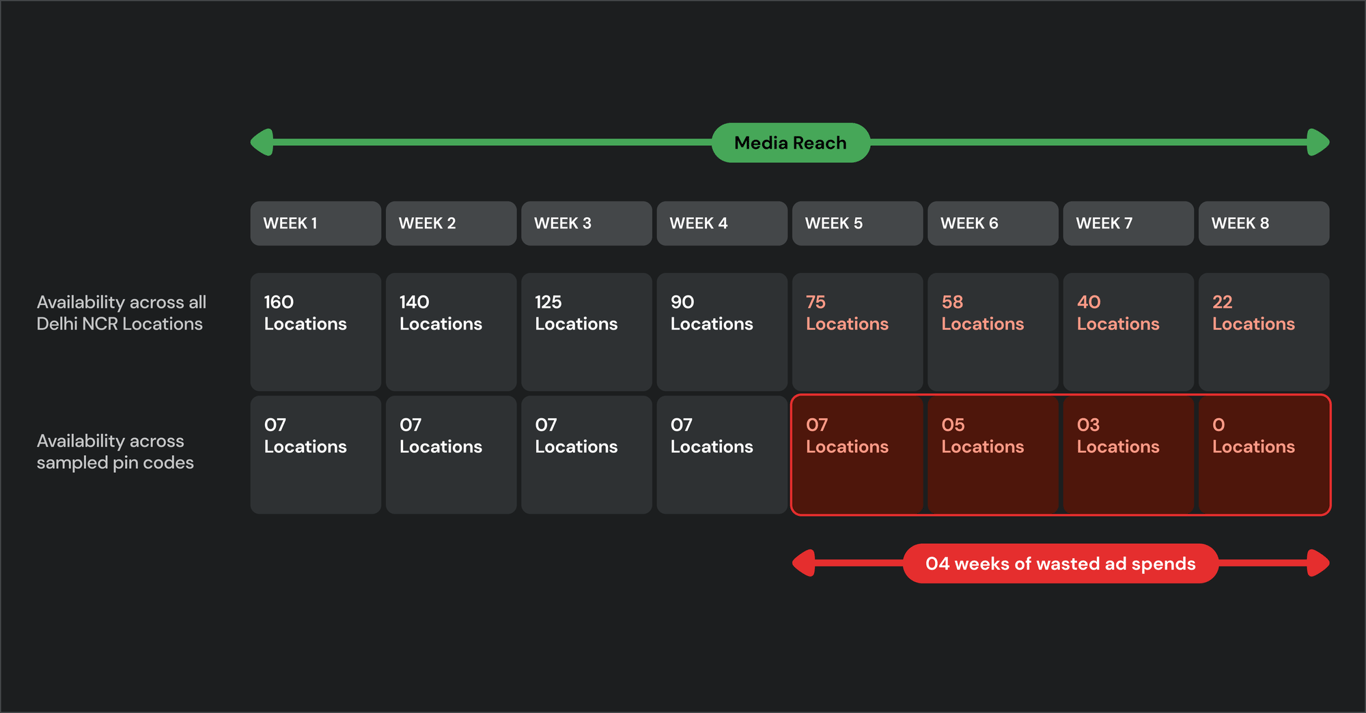

Let’s consider the impact of sampled On-Shelf Availability data on marketing ad campaign effectiveness.

A typical Q-com platform operates with 50 dark stores serving different locations within a region. If a brand samples only a few of those locations to check a SKU’s availability, the product’s reach across the rest of the city remains anyone's guess. Worse, the sampled data can give false assurance when the real picture is different. As a result, there is no accurate basis for deciding whether a product's stock levels can support the campaign promoting it, or whether the campaign needs to be scaled up or down.

Without granular data for all online locations, brands fail to identify availability gaps across locations that impact their ROAS and lead to inefficient allocation of ad budgets.
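To make the sampling risk concrete, here is a minimal Python sketch with made-up numbers. It assumes a city served by 50 hypothetical dark stores (`DS00` to `DS49`) and a SKU that is out of stock in 20 of them. It then compares the on-shelf availability a brand would estimate from five sampled stores with the figure across every store. The store IDs, stock flags, and sample are illustrative assumptions, not real data.

```python
def availability_pct(in_stock: dict[str, bool], stores: list[str]) -> float:
    """Percentage of the given stores where the SKU is listed as in stock."""
    if not stores:
        raise ValueError("need at least one store")
    return 100.0 * sum(in_stock[s] for s in stores) / len(stores)


# Hypothetical city: 50 dark stores, SKU out of stock in DS30 to DS49.
stores = [f"DS{i:02d}" for i in range(50)]
in_stock = {s: i < 30 for i, s in enumerate(stores)}

# Hypothetical sample of five stores that all happen to be well stocked.
sampled = ["DS01", "DS05", "DS12", "DS20", "DS27"]

sampled_osa = availability_pct(in_stock, sampled)  # 100.0
full_osa = availability_pct(in_stock, stores)      # 60.0
oos_stores = [s for s in stores if not in_stock[s]]

print(f"Sampled OSA: {sampled_osa:.0f}%, full OSA: {full_osa:.0f}%")
print(f"{len(oos_stores)} stores cannot fulfil orders driven by the campaign")
```

In this toy case the sample reports full availability, but the SKU is missing in 40% of stores. Ad impressions served to shoppers in those 20 catchments have no stock to convert into.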

Inability to identify and fix localized issues

Moreover, brands struggle to address localized challenges effectively due to the limited scope of their data. In turn, they resort to generalized actions that can negatively impact their market share, competitiveness, and growth.

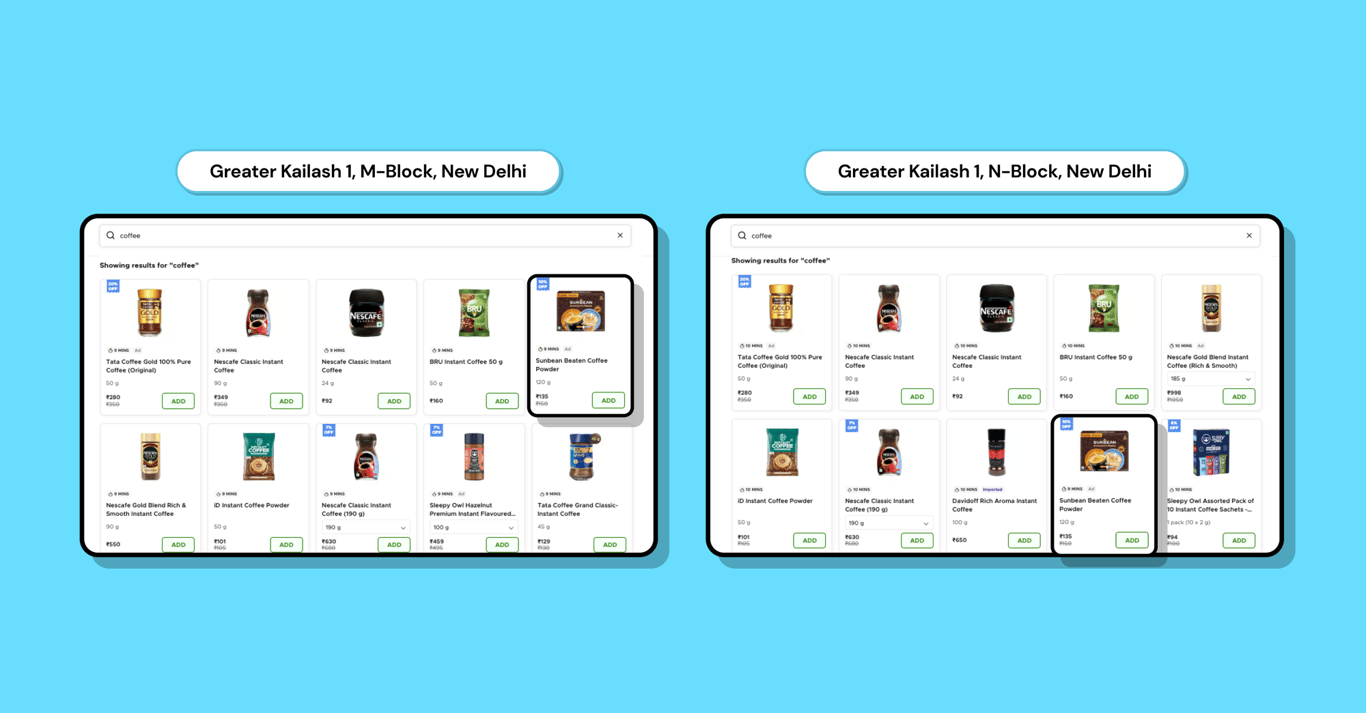

Using sampled share of search data to determine market share is a good example.

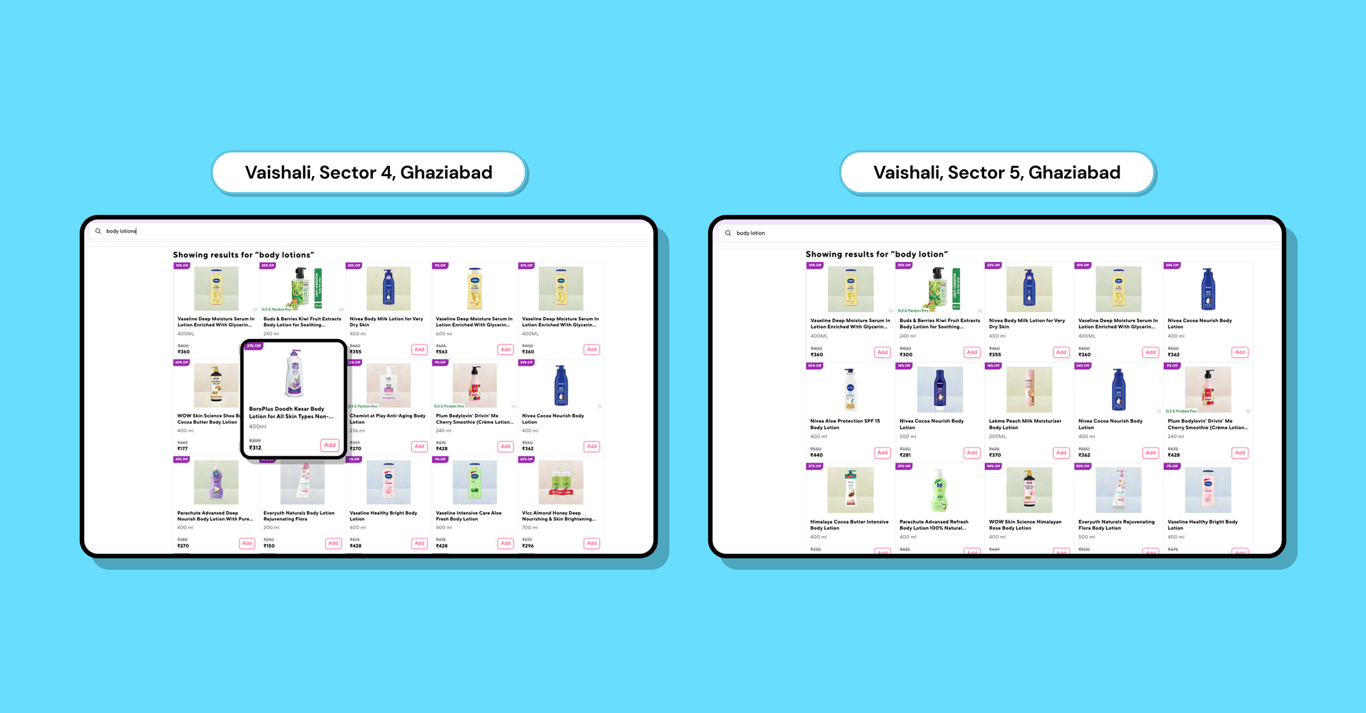

When brands notice a decline in market share, they look at their share of search for insights. However, they often overlook the variation in search results among locations within the same region. For instance, two nearby places might show different search results for the same keyword, or list the same products at different positions.

Without a comprehensive view of search performance taken across all locations, brands lack crucial insight into which store or location is contributing to the issue. This information gap makes it difficult to determine if issues such as unlisted products, out-of-stock items, assortment size, or competition intensity at specific locations are the cause.
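Here is a minimal sketch of how share of search can differ between locations in the same city. It assumes share of search is the percentage of the top-N organic results for a keyword that belong to the brand. This is one common definition; position-weighted variants also exist. The brands, locations, and result lists are hypothetical.

```python
def share_of_search(results: list[str], brand: str, top_n: int = 10) -> float:
    """Percentage of the top-N organic results that belong to `brand`.

    `results` lists the brand of each product in ranked order.
    """
    top = results[:top_n]
    if not top:
        return 0.0
    return 100.0 * sum(1 for b in top if b == brand) / len(top)


# Hypothetical top-5 results for the same keyword, scraped at three
# locations in one city.
serp_by_location = {
    "Location A": ["BrandX", "BrandY", "BrandX", "BrandZ", "BrandX"],
    "Location B": ["BrandY", "BrandZ", "BrandY", "BrandX", "BrandZ"],
    "Location C": ["BrandX", "BrandX", "BrandY", "BrandZ", "BrandY"],
}

sos = {loc: share_of_search(r, "BrandX", top_n=5)
       for loc, r in serp_by_location.items()}
city_avg = sum(sos.values()) / len(sos)

# Locations pulling the city average down, worst first.
laggards = sorted((loc for loc, v in sos.items() if v < city_avg), key=sos.get)
print(sos, f"city average: {city_avg:.0f}%", laggards)
```

The city-level average of 40% hides the fact that Location B sits at 20%. That store-level view is what lets a brand check whether listing gaps, stock-outs, or competition explain the drop.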

Limited usability of scraped data

Furthermore, although scraped data is highly valuable, it is usually confined to dashboards or CSVs, which limits its usability for business users. It is used only for one-off cases instead of being integrated into problem-solving or strategy-building processes. Without complete triangulation between internal data, platform insights, and scraped data, critical questions remain unanswered, and decisions rest on incomplete information or hunches.
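Triangulation of this kind starts with joining datasets on a shared key. The sketch below is a simplified, standard-library illustration, not GobbleCube's pipeline. It assumes internal offtake and scraped availability are both keyed by a hypothetical (store, SKU, date) tuple. It inner-joins the two sources and compares units sold on in-stock versus out-of-stock days.

```python
from statistics import mean

# Hypothetical internal offtake: (store, sku, date) -> units sold.
offtake = {
    ("DS01", "COLDBREW-250", "2024-05-01"): 40,
    ("DS01", "COLDBREW-250", "2024-05-02"): 0,
    ("DS02", "COLDBREW-250", "2024-05-01"): 35,
    ("DS02", "COLDBREW-250", "2024-05-02"): 38,
    ("DS03", "COLDBREW-250", "2024-05-01"): 12,  # no scraped row for this key
}

# Hypothetical scraped availability for the same keys: True if in stock.
scraped_in_stock = {
    ("DS01", "COLDBREW-250", "2024-05-01"): True,
    ("DS01", "COLDBREW-250", "2024-05-02"): False,
    ("DS02", "COLDBREW-250", "2024-05-01"): True,
    ("DS02", "COLDBREW-250", "2024-05-02"): True,
}


def inner_join(left: dict, right: dict) -> dict:
    """Keep only keys present in both sources, pairing their values."""
    return {k: (left[k], right[k]) for k in left.keys() & right.keys()}


joined = inner_join(offtake, scraped_in_stock)
in_stock_units = [units for units, ok in joined.values() if ok]
oos_units = [units for units, ok in joined.values() if not ok]

print(f"avg units on in-stock days: {mean(in_stock_units):.1f}")
print(f"avg units on out-of-stock days: {mean(oos_units):.1f}")
```

An inner join drops the `DS03` row, which has no matching scrape. Counting such unmatched keys is a cheap way to spot coverage gaps between the two sources.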

High cost of scraping tools and limited adaptability to change

The prevailing cost structure is influenced by the economics of platforms like Amazon, which operate at the pin-code level. Scraping data across multiple pin codes can incur substantial expenses for brands. As a result, they often restrict themselves to gathering data from sampled pin codes, creating potential gaps in insights.
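A back-of-the-envelope sketch shows why coverage gets expensive. It makes a hypothetical assumption: each run fetches one search page per tracked keyword and one product page per tracked SKU at every location. Under that assumption, request volume, and any per-request cost, grows linearly with the number of locations.

```python
def daily_fetches(locations: int, keywords: int, skus: int, runs_per_day: int) -> int:
    """Rough page fetches per day, assuming one search page per keyword and
    one product page per SKU at every location on every run."""
    return locations * (keywords + skus) * runs_per_day


# Hypothetical brand tracking 40 keywords and 60 SKUs, scraped 4 times a day.
sampled = daily_fetches(locations=10, keywords=40, skus=60, runs_per_day=4)
full = daily_fetches(locations=500, keywords=40, skus=60, runs_per_day=4)

print(sampled, full, full // sampled)  # 4000 200000 50
```

Under these assumptions, moving from 10 sampled pin codes to 500 multiplies daily fetches by 50, from 4,000 to 200,000.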

Moreover, the dynamic nature of online platforms introduces another layer of complexity. Without a proactive understanding of the contracts and terms governing data scraping, brands risk losing valuable information and insights due to unforeseen changes in platform structures.

Introducing our purpose-built solution: granularity at the hyperlocal level with enriched data

Given the hyperlocal nature of commerce, particularly in the context of dark stores and quick commerce, brands must refine their strategies down to the last mile. At GobbleCube, we've engineered a cutting-edge system designed to deliver unparalleled insights for individual dark stores or fulfilment centers.

Our system scrapes data at precise latitude-longitude coordinates, capturing nuanced insights tailored to the unique dynamics of each location. This covers the brand's own SKUs as well as competitors' SKUs.
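Scraping at coordinates rather than pin codes raises a practical question: which points should be probed so that every dark store's catchment is covered at least once? The sketch below lays a regular latitude-longitude grid over a bounding box and maps each point to its nearest store within a radius. The store coordinates, the 0.01-degree step, and the 3 km radius are hypothetical. The "nearest store serves the point" rule is a simplifying assumption; in practice, the platform decides which store serves an address.

```python
import math


def haversine_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))  # 6371 km: mean Earth radius


def probe_grid(lat_min, lat_max, lon_min, lon_max, step):
    """Evenly spaced (lat, lon) probe points covering a bounding box."""
    n_lat = round((lat_max - lat_min) / step) + 1
    n_lon = round((lon_max - lon_min) / step) + 1
    return [(lat_min + i * step, lon_min + j * step)
            for i in range(n_lat) for j in range(n_lon)]


def nearest_store(point, stores, max_km):
    """Nearest store ID within max_km of the point, or None if none is close enough."""
    store_id, dist = min(((sid, haversine_km(point, loc)) for sid, loc in stores.items()),
                         key=lambda t: t[1])
    return store_id if dist <= max_km else None


# Hypothetical dark-store coordinates (roughly Gurgaon) and a 0.01-degree grid.
stores = {"DS01": (28.46, 77.03), "DS02": (28.42, 77.05), "DS03": (28.49, 77.08)}
probes = probe_grid(28.40, 28.50, 77.00, 77.10, step=0.01)

coverage: dict[str, list] = {}
for p in probes:
    sid = nearest_store(p, stores, max_km=3.0)
    if sid is not None:
        coverage.setdefault(sid, []).append(p)

uncovered = set(stores) - set(coverage)
print({sid: len(pts) for sid, pts in coverage.items()}, "uncovered:", uncovered)
```

A non-empty `uncovered` set would signal that the grid is too coarse or the bounding box misses a store's catchment.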

By enriching existing data with scraped data, we empower brands to analyze data comprehensively, derive actionable insights, and refine their strategies effectively.

Impact of enriched granular data on business decision making

Our solution's enriched granular data empowers business users to address critical questions regarding their business performance.

Optimizing ad spends

Access to availability percentages across all online locations allows brands to allocate ad budgets to the areas where they can achieve the highest ROAS. It also reduces the risk of customer dissatisfaction caused by product unavailability.
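Here is one simple way to turn location-level availability into an ad budget split, sketched under explicit assumptions. Each location has a hypothetical demand weight (for example, its historical share of offtake). Locations below a minimum on-shelf availability threshold get no spend. The remaining locations share the budget in proportion to demand weight multiplied by availability. This is an illustrative heuristic, not a prescribed allocation model.

```python
def allocate_budget(total: float, availability: dict[str, float],
                    demand: dict[str, float], min_osa: float = 70.0) -> dict[str, float]:
    """Split an ad budget across locations in proportion to demand x availability.

    Locations with on-shelf availability (%) below `min_osa` receive nothing.
    """
    weights = {loc: demand[loc] * osa / 100.0
               for loc, osa in availability.items() if osa >= min_osa}
    total_weight = sum(weights.values())
    if total_weight == 0:
        return {loc: 0.0 for loc in availability}
    return {loc: total * weights.get(loc, 0.0) / total_weight for loc in availability}


# Hypothetical inputs: OSA % per location and demand weights (e.g. offtake share).
availability = {"A": 95.0, "B": 40.0, "C": 80.0}
demand = {"A": 1.0, "B": 2.0, "C": 1.0}

plan = allocate_budget(100_000, availability, demand)
print({loc: round(amount) for loc, amount in plan.items()})
```

Location B has the highest demand weight but only 40% availability. Under this rule, its share moves to A and C, where shoppers are more likely to find the product in stock.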

Allocating trade spends strategically

GobbleCube provides Share of Search and Discounting data, both for the brand’s own products and those of competitors, so that brands have insights into which locations and SKUs are impacting their overall market share negatively. Brands can now allocate trade spends more strategically, counteracting competitors' tactics and maximizing their own competitiveness in those areas.
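This sketch ranks locations for trade spend using both the share-of-search gap and the discount gap versus the strongest competitor. The scoring rule, which simply adds the two gaps in percentage points, is hypothetical, as are all the figures. A real model would likely weight these factors and account for offtake.

```python
from dataclasses import dataclass


@dataclass
class LocationSnapshot:
    location: str
    own_sos: float              # brand's share of search, %
    competitor_sos: float       # strongest competitor's share of search, %
    own_discount: float         # brand's average discount, %
    competitor_discount: float  # that competitor's average discount, %


def trade_spend_priority(s: LocationSnapshot) -> float:
    """Hypothetical score: percentage points by which the brand trails the top
    competitor on search share plus discount depth. Higher = act first."""
    sos_gap = max(0.0, s.competitor_sos - s.own_sos)
    discount_gap = max(0.0, s.competitor_discount - s.own_discount)
    return sos_gap + discount_gap


snapshots = [
    LocationSnapshot("Location A", 40, 30, 10, 10),
    LocationSnapshot("Location B", 20, 45, 5, 15),
    LocationSnapshot("Location C", 30, 35, 10, 12),
]

ranked = sorted(snapshots, key=trade_spend_priority, reverse=True)
for s in ranked:
    print(s.location, trade_spend_priority(s))
```

Location B trails by 25 points on search share and 10 points on discount depth, so it ranks first. Location A, where the brand already leads, scores zero.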

Informed decision making

By analyzing data in its entirety, including primary sales, offtake, availability, discounting, and visibility data, brands can make informed decisions and establish causal relationships between different metrics.

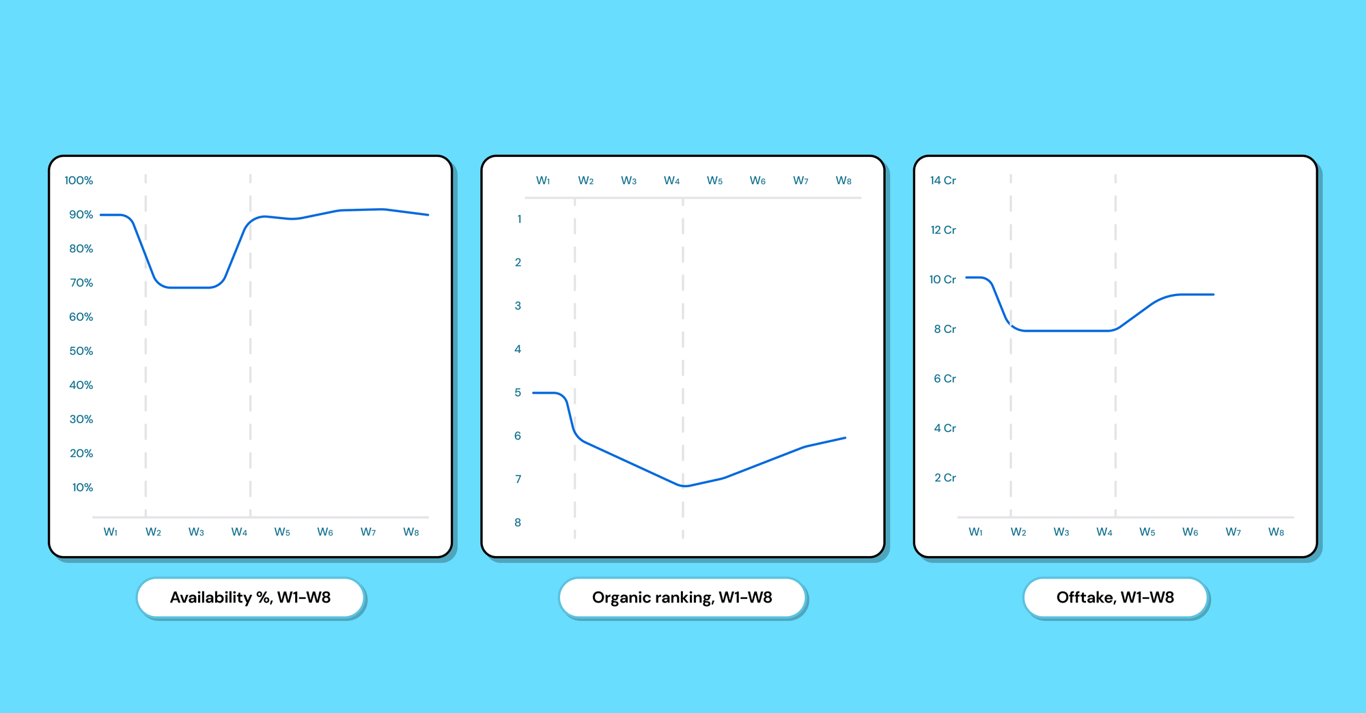

For instance, brands can track and establish correlations between product availability and organic rankings, as well as the long-term impact of availability on search and offtake.

As illustrated in the graph, when availability dipped, the SKU's organic ranking slipped from position 5 to position 7. Even after the availability issue was fixed, the SKU stayed at rank 7 for several more weeks. Tracking these trends together lets brands evaluate the overall effect on offtake, which in this case also showed a dip that persisted for several weeks.
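A lingering effect like this, where ranking stays degraded after availability recovers, can be measured with a simple before/after event analysis. A plain same-week correlation can miss this kind of effect, because the weeks after recovery pair good availability with a poor rank. The weekly series below are hypothetical, shaped to mirror the described case (rank 5 slipping to 7). The 90% availability threshold is also an assumption.

```python
from typing import Optional


def lingering_rank_impact(availability: list[float], rank: list[int],
                          osa_threshold: float = 90.0) -> Optional[tuple[int, int, int]]:
    """Before/after analysis around the first availability dip.

    Returns (baseline_rank, worst_rank_from_dip_onward, weeks_still_degraded),
    where weeks_still_degraded counts consecutive weeks after availability
    recovered in which the SKU still ranked worse (higher number) than its
    pre-dip rank. Returns None if there is no dip or no week before it.
    """
    dip_weeks = [i for i, osa in enumerate(availability) if osa < osa_threshold]
    if not dip_weeks or dip_weeks[0] == 0:
        return None
    start = end = dip_weeks[0]
    while end + 1 < len(availability) and availability[end + 1] < osa_threshold:
        end += 1  # extend to the end of the first contiguous dip
    baseline = rank[start - 1]
    worst = max(rank[start:])
    weeks_degraded = 0
    for r in rank[end + 1:]:
        if r <= baseline:
            break
        weeks_degraded += 1
    return baseline, worst, weeks_degraded


# Hypothetical weekly series: availability dips in weeks 3-4, rank slips 5 -> 7.
weekly_osa = [98, 97, 60, 55, 96, 97, 98, 97]
organic_rank = [5, 5, 6, 7, 7, 7, 7, 5]

print(lingering_rank_impact(weekly_osa, organic_rank))  # (5, 7, 3)
```

The same function can be run on weekly offtake (with the comparison flipped, since lower offtake is worse) to size the persistent sales dip.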

Cost-effectiveness and real-time adaptability

At GobbleCube, we do not offer scraping as a separate tool. It is part of our tech stack that enables brands to decode revenue by assimilating, contextualizing, and triangulating data together from multiple sources including marketplace platforms, market intelligence, internal ops, and finance tools to derive insights.

We prioritize real-time adaptability in scraping processes. We proactively monitor and respond to changes in response formats, platform structures, or any other dynamic elements to ensure continuity and accuracy in data even with platform changes.
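One way to catch platform changes early is to validate every scraped record against the fields that downstream metrics depend on, then alert when validation failures spike. The field names and types below are hypothetical. This is a minimal standard-library sketch, not a description of GobbleCube's monitoring.

```python
# Hypothetical fields that downstream metrics depend on, with accepted types.
REQUIRED_FIELDS = {
    "sku_id": str,
    "name": str,
    "price": (int, float),  # note: bool is a subclass of int, so True would pass
    "in_stock": bool,
}


def schema_problems(record: dict) -> list[str]:
    """List every missing or wrongly typed required field in a scraped record."""
    problems = []
    for field, expected in REQUIRED_FIELDS.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            problems.append(f"unexpected type for {field}: {type(record[field]).__name__}")
    return problems


def failure_rate(records: list[dict]) -> float:
    """Share of records with at least one schema problem."""
    if not records:
        return 0.0
    return sum(1 for r in records if schema_problems(r)) / len(records)


before = {"sku_id": "CB-250", "name": "Cold Brew", "price": 120, "in_stock": True}
# The same product after a hypothetical platform change: renamed keys, price as text.
after = {"sku_id": "CB-250", "title": "Cold Brew", "price": "120", "available": True}

print(schema_problems(after))
print(failure_rate([before, after]))  # 0.5; alert if this crosses a chosen threshold
```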

If you're intrigued by the potential of advanced data scraping capabilities, reach out to us to explore how GobbleCube can strengthen your business decision-making.